Experiments

We evaluate the proposed method on both synthetic and real deformable datasets to assess accuracy, drift, and robustness under increasing non-rigid motion. We report standard trajectory metrics, namely absolute trajectory error (ATE), absolute rotation error (ARE), and relative pose error (RPE), together with the number of successfully tracked frames, and compare against ORB-SLAM3 and NR-SLAM.

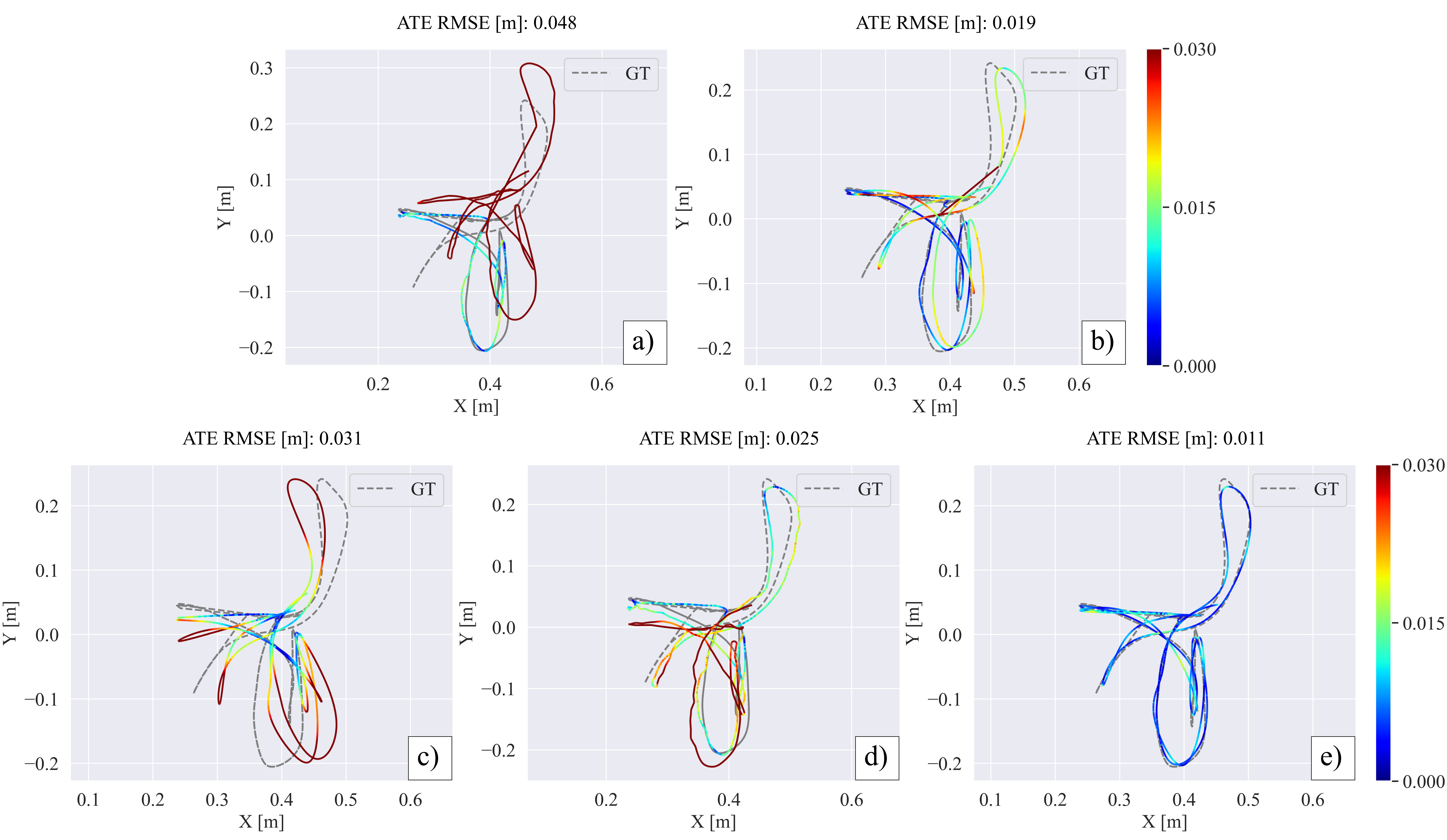

Figure 3. Qualitative trajectory comparison on sequence R4. Rigid VI SLAM (ORB-SLAM3) and non-rigid SLAM (NR-SLAM) may suffer tracking loss/relocalization under deformation; visual-only non-rigid variants can accumulate drift. Combining IMU anchoring with explicit non-rigid modeling (DefVINS full) improves global consistency. Dashed line: ground truth.

Synthetic evaluation. We first benchmark on the Drunkard’s Dataset, which provides 19 synthetic RGB-D sequences with full 3D ground truth and four deformation levels (from low to extreme). Since IMU readings are not provided, we represent the ground-truth trajectories as B-splines and differentiate them to generate temporally smooth inertial measurements. The results show that inertial sensing and explicit non-rigid regularization play complementary roles: inertial constraints mainly stabilize short-term motion and thereby reduce drift, while the non-rigid model is key to preserving global accuracy as deformation increases.
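As an illustration of how inertial readings can be derived from a differentiable trajectory, the ideal (noise-free, bias-free) measurement model is sketched below. The notation is an assumption introduced here and not taken from the paper: p_W(t) and R_WB(t) denote the spline-interpolated body position and orientation in the world frame, g_W is the gravity vector in the world frame, and (·)^∨ maps a skew-symmetric matrix to its 3-vector. The spline order and any bias or noise terms added on top are not specified in this summary.

```latex
\begin{aligned}
\mathbf{a}_m(t) &= \mathbf{R}_{WB}(t)^{\top}\bigl(\ddot{\mathbf{p}}_W(t) - \mathbf{g}_W\bigr), \\
\boldsymbol{\omega}_m(t) &= \bigl(\mathbf{R}_{WB}(t)^{\top}\,\dot{\mathbf{R}}_{WB}(t)\bigr)^{\vee}.
\end{aligned}
```

The accelerometer model needs the second derivative of position, so the position spline must be at least twice differentiable for the synthesized signal to be continuous.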

Observability study. To connect performance with estimator conditioning, we compute a numerical observability score from the Jacobians stacked over multiple keyframe pairs, using the ratio ρk = σmin / σmax of the smallest to the largest singular value. A ratio close to zero indicates a nearly unobservable direction in the state. The analysis shows that inertial terms lift the near-null modes associated with gravity and biases, while non-rigid regularization prevents deformation variables from absorbing rigid drift, so the problem becomes well conditioned after only a few frames.
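A minimal sketch of such a score, assuming the per-keyframe-pair Jacobians are available as dense NumPy arrays with one column per state variable. The function name `observability_score` and the convention of returning 0 for an under-determined stack are choices made for this illustration, not details from the paper.

```python
import numpy as np

def observability_score(jacobians):
    """Return sigma_min / sigma_max of the row-stacked Jacobians.

    jacobians: list of (m_i, n) arrays, one per keyframe pair (assumed layout).
    """
    J = np.vstack(jacobians)
    # Fewer residual rows than state columns: some direction is unconstrained.
    if J.shape[0] < J.shape[1]:
        return 0.0
    # Singular values are returned in descending order.
    s = np.linalg.svd(J, compute_uv=False)
    if s[0] == 0.0:
        return 0.0
    return float(s[-1] / s[0])

if __name__ == "__main__":
    J_a = np.array([[2.0, 0.0]])  # constrains only the first state variable
    J_b = np.array([[0.0, 1.0]])  # constrains only the second state variable
    print(observability_score([J_a]))
    print(observability_score([J_a, J_b]))
```

In the usage example, a single keyframe pair leaves one direction unconstrained, and adding the second pair makes the stacked system full rank, which mirrors how additional frames improve conditioning.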

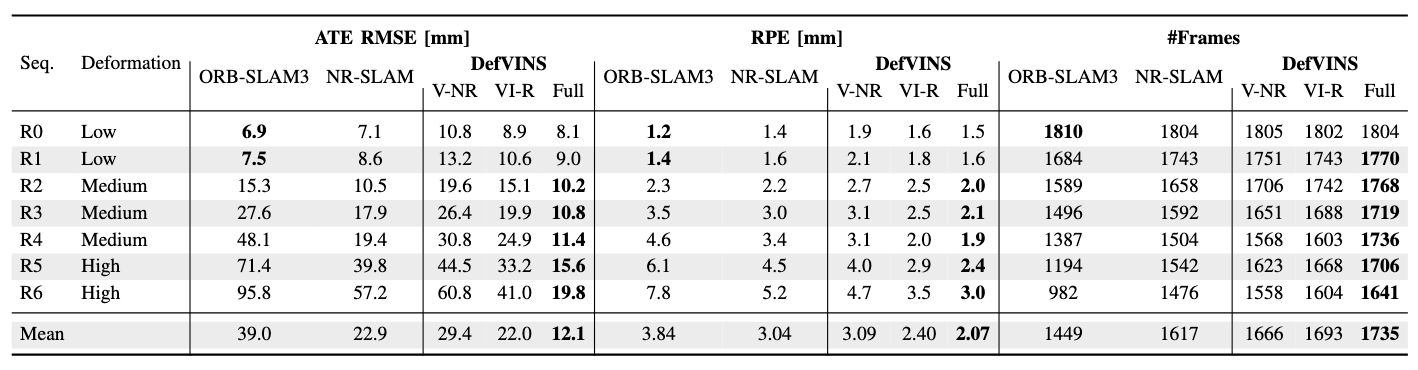

Real-world evaluation. We further validate on seven real RGB-D sequences (848×480) recorded in an industrial setup with synchronized cameras, an IMU, and motion-capture ground truth. The sequences (R0–R6) capture progressively stronger deformations of a textured mandala cloth, enabling a systematic stress test from near-rigid motion to severe non-rigid dynamics. Consistent with the synthetic study, rigid methods perform best in near-rigid regimes, while DefVINS becomes increasingly advantageous as deformation grows, improving both accuracy and tracking coverage under strong non-rigid motion.

Table 3. Comparison of visual–inertial odometry methods on real deformable sequences (ATE RMSE, translational RPE, and tracked frames). Best results per metric are highlighted; the last row reports the mean.
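For readers unfamiliar with the ATE RMSE metric, the following sketch shows one common way to compute it: rigidly align the estimated positions to the ground truth with the Kabsch method, then take the root-mean-square of the residual distances. This is an assumption about the standard procedure, not a description of the evaluation scripts used for the table. The function names are hypothetical, and the sketch assumes both trajectories are already time-associated (N, 3) arrays.

```python
import numpy as np

def align_rigid(est, gt):
    """Kabsch alignment: R, t minimizing sum ||R @ est_i + t - gt_i||^2."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    return R, t

def ate_rmse(est, gt):
    """RMSE of position error after rigid alignment of est to gt."""
    R, t = align_rigid(est, gt)
    aligned = est @ R.T + t
    err = np.linalg.norm(aligned - gt, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gt = rng.normal(size=(100, 3))
    est = gt + rng.normal(scale=0.05, size=gt.shape)  # synthetic noisy estimate
    print(f"ATE RMSE: {ate_rmse(est, gt):.4f}")
```

Because of the alignment step, the metric is invariant to a global rotation and translation of the estimate, so it measures trajectory shape error rather than the choice of reference frame.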

Conclusion

We introduced DefVINS, an observability-gated visual–inertial odometry framework for deformable scenes that separates a rigid, IMU-anchored state from a non-rigid deformation model represented by an embedded deformation graph. By progressively activating deformation degrees of freedom only when the estimation becomes well conditioned, the method avoids early over-fitting and catastrophic drift in low-excitation or highly deformable regimes.

Extensive evaluations on both synthetic and real datasets show that IMU anchoring and conditioning-aware deformation activation provide stable and accurate state estimation across a wide range of deformation levels, making DefVINS a reliable solution for visual–inertial odometry in the presence of non-rigid scene dynamics.

Dataset download

We release the dataset used in our experiments, consisting of seven real-world deformable sequences (R0–R6) with synchronized RGB-D images, IMU measurements, and motion-capture ground truth. The level of non-rigid deformation increases progressively from R0 to R6.