Existing datasets do not capture the specific challenges of two fields: multi-modality and sequentiality in SLAM, and generalization across viewpoints and illumination in neural rendering. To bridge this gap, we introduce SLAM&Render, a novel dataset designed to explore the intersection of both domains. It comprises 40 sequences with synchronized RGB, depth, IMU and robot kinematic data. These sequences capture five distinct setups featuring consumer and industrial goods under four different lighting conditions, with separate training and test trajectories per scene, as well as object rearrangements.

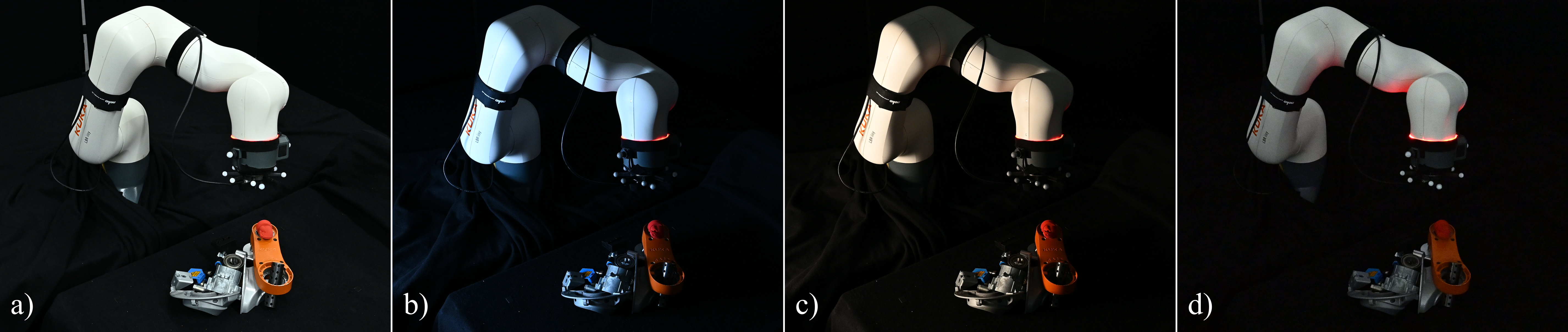

Illustration of our SLAM&Render dataset, captured by a camera mounted on the end effector of a robotic arm that moves around a set of objects on a table. The four lighting conditions present in our dataset are: (a) Natural, (b) Cold, (c) Warm, (d) Dark.

Dataset Scenes

Our SLAM&Render dataset includes five distinct scenes, each designed to reflect different types of real-world environments:

- Setup 1 and Setup 2 feature common supermarket goods, including transparent objects.

- Setup 3 includes a different selection of supermarket goods (only opaque objects are present).

- Setup 4 consists of various industrial objects.

- Setup 5 presents industrial objects in a cluttered arrangement.

A visual overview of all setups is shown in the image below.

The video below shows the training trajectories over these scenes:

Illumination comparison

The four lighting conditions present in our dataset are shown below: Natural, Cold, Warm and Dark.

The comparison below highlights the difference between the Cold and Warm lighting conditions: the color tone of the sponge and of the mate argentino changes depending on the lighting.

Dataset Structure

Every sequence contains the following:

- `rgb/`: Folder containing the color images (PNG format, 3 channels, 8 bits per channel), whose names are the corresponding Unix Epoch timestamps.

- `depth/`: Folder containing the depth images (PNG format, 1 channel, 16 bits per channel, distance scaled by a factor of 1000), whose names are the corresponding Unix Epoch timestamps. The depth images are already aligned with the RGB sensor.

- `associations.txt`: File listing all the temporal associations between the RGB and depth images (format: `rgb_timestamp rgb_file depth_timestamp depth_file`).

- `imu.txt`: File with accelerometer and gyroscope readings, each paired with the corresponding Unix Epoch timestamp (format: `timestamp ax ay az gx gy gz`).

- `groundtruth.txt`: File containing the ground-truth of the camera poses w.r.t. the world reference frame, expressed as a sequence of quaternions and translation vectors with the corresponding Unix Epoch timestamp (format: `timestamp qx qy qz qw tx ty tz`).

- `groundtruth_raw.csv`: File provided by the motion capture system containing the raw ground-truth data of the flange-marker-ring trajectory.

- `extrinsics.yaml`: File containing the transformation matrices of the reference frames involved in the data collection process.

- `intrinsics.yaml`: File containing the intrinsic calibration parameters of the camera.

- `robot_data/flange_poses.txt`: File containing the poses of the robot flange with the corresponding Unix Epoch timestamp. Each pose is expressed w.r.t. the robot base as a quaternion and translation vector (format: `timestamp qx qy qz qw tx ty tz`).

- `robot_data/joint_positions.txt`: File reporting the angles of each robot joint along with the corresponding Unix Epoch timestamp (format: `timestamp q1 q2 q3 q4 q5 q6`).
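The text files listed above are whitespace-separated, so one sequence can be read with a few lines of standard-library Python. The sketch below is illustrative only and rests on several assumptions not stated in the dataset description: timestamps are parsed as float seconds, lines starting with `#` are treated as comments, and quaternions follow the Hamilton convention with `qw` as the scalar part (as the field order `qx qy qz qw` suggests). It turns each `timestamp qx qy qz qw tx ty tz` row into a 4x4 homogeneous matrix, undoes the x1000 depth scaling for a raw 16-bit value, and finds the closest pose for a given timestamp by binary search. All names (`parse_poses`, `nearest_index`, `load_sequence`, etc.) are hypothetical, not part of an official toolkit; decoding the PNG files themselves needs an image library and is left out.

```python
import bisect
import math
from dataclasses import dataclass
from pathlib import Path
from typing import Iterator, List, Tuple

# Assumption: stored 16-bit depth value = distance * 1000 (see depth/ above).
DEPTH_SCALE = 1000.0

Matrix4 = List[List[float]]


@dataclass
class Association:
    rgb_timestamp: float
    rgb_file: str
    depth_timestamp: float
    depth_file: str


def _rows(text: str) -> Iterator[List[str]]:
    """Yield whitespace-split rows, skipping blank lines and '#' comments (assumed)."""
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            yield line.split()


def parse_associations(text: str) -> List[Association]:
    # Row format: rgb_timestamp rgb_file depth_timestamp depth_file
    return [Association(float(a), b, float(c), d) for a, b, c, d in _rows(text)]


def parse_imu(text: str) -> List[Tuple[float, Tuple[float, ...], Tuple[float, ...]]]:
    # Row format: timestamp ax ay az gx gy gz (values kept in the file's units)
    out = []
    for row in _rows(text):
        v = [float(x) for x in row]
        out.append((v[0], tuple(v[1:4]), tuple(v[4:7])))
    return out


def quat_to_matrix(qx: float, qy: float, qz: float, qw: float) -> List[List[float]]:
    """3x3 rotation matrix from a Hamilton quaternion, normalized first."""
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    x, y, z, w = qx / n, qy / n, qz / n, qw / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def parse_poses(text: str) -> List[Tuple[float, Matrix4]]:
    """Parse 'timestamp qx qy qz qw tx ty tz' rows into (timestamp, 4x4 matrix)."""
    poses = []
    for row in _rows(text):
        t, qx, qy, qz, qw, tx, ty, tz = (float(x) for x in row)
        R = quat_to_matrix(qx, qy, qz, qw)
        # Append the translation as a 4th column, then the homogeneous row.
        T = [R[0] + [tx], R[1] + [ty], R[2] + [tz], [0.0, 0.0, 0.0, 1.0]]
        poses.append((t, T))
    return poses


def depth_to_distance(raw: int) -> float:
    """Undo the x1000 scaling of a raw 16-bit depth value."""
    return raw / DEPTH_SCALE


def nearest_index(sorted_ts: List[float], t: float) -> int:
    """Index of the timestamp in an ascending list closest to t (binary search)."""
    if not sorted_ts:
        raise ValueError("empty timestamp list")
    i = bisect.bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    return i if sorted_ts[i] - t < t - sorted_ts[i - 1] else i - 1


def load_sequence(seq_dir: str) -> dict:
    """Hypothetical helper: parse the text files of one sequence directory."""
    root = Path(seq_dir)
    return {
        "associations": parse_associations((root / "associations.txt").read_text()),
        "imu": parse_imu((root / "imu.txt").read_text()),
        "groundtruth": parse_poses((root / "groundtruth.txt").read_text()),
        "flange_poses": parse_poses((root / "robot_data" / "flange_poses.txt").read_text()),
    }
```

For example, to attach a ground-truth pose to each RGB frame, one could collect the ground-truth timestamps into a list (assumed to be in ascending order) and call `nearest_index(gt_times, assoc.rgb_timestamp)` for every association; binary search keeps each lookup logarithmic in the number of poses. Whether the stored pose maps camera coordinates to world coordinates or the reverse should be checked against `extrinsics.yaml` before composing transforms.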